23rd October 2025


Hijack addresses critical trend where almost half of people consult AI for medical advice.

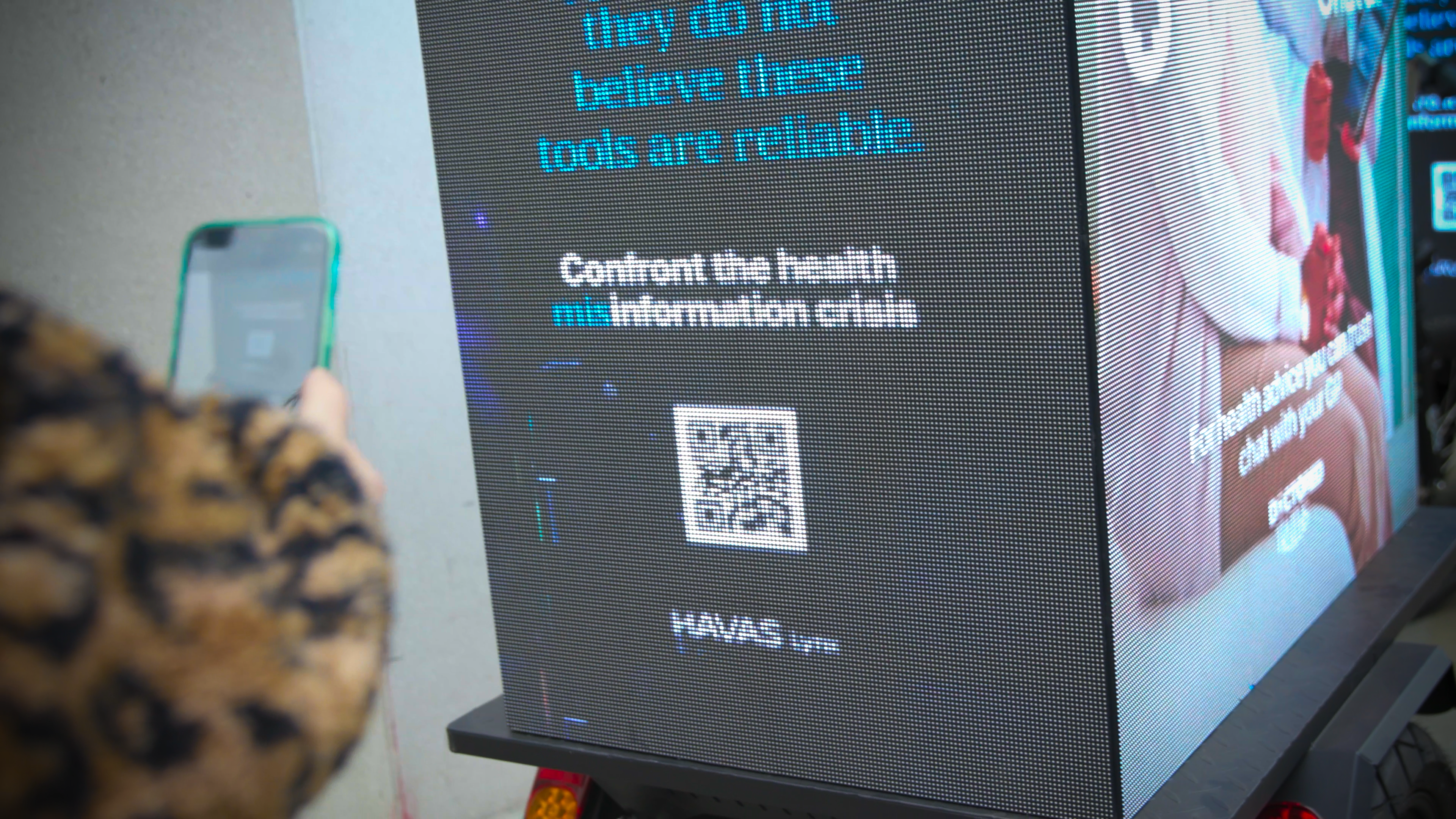

Developed as a direct extension of the ‘Doctored Truths’ white paper, the ‘Chat GP’ initiative, a reactive out-of-home (OOH) activation, directly confronts the rise of medical misinformation generated by artificial intelligence and reaffirms the general practitioner (GP) as the definitive source of trusted health advice.

The ‘Chat GP’ hijack addresses a critical trend identified by Point.1 data: while almost half of people consult AI for medical advice, a significant 44% doubt its reliability. Havas Lynx strategically ‘hijacked’ a leading AI company’s advertisements across Manchester, transforming the question “When you can ask AI anything, do you trust it with your health?” into a clear directive: “For trusted medical advice, always chat to your GP.”

“The rise of AI tools presents a new challenge in the fight against health misinformation,” states Claire Knapp, chief executive officer at Havas Lynx. “Whilst AI offers huge opportunities to modernise our healthcare service, our ‘Chat GP’ activation is a vital reminder that AI is not a substitute for human medical expertise. These tools are built for engagement, not patient safety, and relying on them for our health advice poses severe risks. ‘Doctored Truths’ highlights the erosion of trust in healthcare; this is a step to reclaim that trust and champion the invaluable role of our GPs.”

‘Chat GP’ reinforces the core message of the ‘Doctored Truths’ white paper, which identifies health misinformation as the world’s top short-term risk, one that undermines public trust and leads to tangible harm. Havas Lynx urges the pharmaceutical industry and the broader healthcare ecosystem to join it in leveraging scientific expertise to combat this growing threat to patient health.
